Uploading and Storing Data

This section covers how data enters the DoT Platform, how it is stored, and the security practices that govern its storage and retrieval.

DoT receives data through two primary mechanisms:

Push: Data is pushed into the DoT Platform via external APIs.

Pull: Data is pulled from a connected system on a regular schedule.

Push: Data Upload

Access Token

Data is uploaded into DoT through an HTTP POST operation. To use this operation, a tenant user must first obtain an upload token, which can be generated in the DoT Web UI.
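As a rough illustration of the upload operation, the sketch below builds an HTTP POST request that carries an upload token. The endpoint URL, the `Authorization: Bearer` header scheme, and the JSON payload shape are assumptions made for illustration only; the real values are not stated in this document.

```python
import json
import urllib.request

# Hypothetical endpoint: the real upload URL is not given in this document.
UPLOAD_URL = "https://dot.example.com/api/upload"


def build_upload_request(token: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) an HTTPS POST request carrying the upload token."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        UPLOAD_URL,
        data=body,
        method="POST",
        headers={
            # Assumed header scheme; DoT may expect the token elsewhere.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would send the request; the sketch stops short of that so it can be inspected without network access.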

Upload tokens are created with an expiry date, typically one year after the token is created. On that date the token is marked internally as expired, can no longer be used to upload data to DoT, and must be replaced by a new token. A tenant owner can also list upload tokens and revoke them at any time; a revoked token can likewise no longer be used to upload data to DoT.
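The expiry and revocation rules can be summarised in a few lines of code. This is a minimal sketch, assuming a simple in-memory token model; the `UploadToken` class and its field names are hypothetical and do not reflect how DoT stores tokens internally.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class UploadToken:
    """Hypothetical in-memory model of an upload token (not DoT's actual schema)."""
    created_at: datetime
    expires_at: datetime
    revoked: bool = False

    def is_usable(self, now: datetime) -> bool:
        # A token can upload only if it is neither revoked nor expired.
        return not self.revoked and now < self.expires_at


def new_token(created_at: datetime, lifetime_days: int = 365) -> UploadToken:
    # The typical lifetime is one year from creation; 365 days approximates that.
    return UploadToken(created_at, created_at + timedelta(days=lifetime_days))


created = datetime(2024, 1, 1, tzinfo=timezone.utc)
token = new_token(created)
print(token.is_usable(created + timedelta(days=30)))   # checked within the lifetime
print(token.is_usable(created + timedelta(days=365)))  # checked on the expiry date
```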

Each upload token is associated with a specific DoT dataset. Uploading data to a different dataset therefore requires the token that matches that dataset, which enforces authorization and access control per dataset.

CI/CD Integration

CI/CD integration is built on upload tokens and the upload REST endpoint. To set up a working CI/CD pipeline, configure the token as a secret and use a Windows script (usually a curl command) to upload data to DoT. The Planit DoT team will help the project write these scripts and will provide ongoing support for them.
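The document describes a Windows script built around curl; purely as an illustration of the secret-handling part of such a pipeline step, here is a minimal Python sketch. The environment variable name `DOT_UPLOAD_TOKEN` and both helper functions are hypothetical, not part of any DoT tooling.

```python
import os

# Hypothetical secret name; use whatever name the CI system's secret store exposes.
TOKEN_ENV_VAR = "DOT_UPLOAD_TOKEN"


def read_upload_token(env=os.environ) -> str:
    """Return the upload token from the environment, or raise if it is missing."""
    token = env.get(TOKEN_ENV_VAR, "").strip()
    if not token:
        raise RuntimeError(
            f"{TOKEN_ENV_VAR} is not set; configure it as a pipeline secret"
        )
    return token


def mask(token: str) -> str:
    """Show at most the last four characters so the full token stays out of build logs."""
    return "*" * max(len(token) - 4, 0) + token[-4:]
```

Failing fast when the secret is missing makes a misconfigured pipeline obvious, and logging only `mask(token)` avoids leaking the token into build output.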

Pull: Connectors Access Control

A connector in DoT represents a “pull” connection to a data source, for example a pull from a Jira instance. The data source associated with a connector must provide an API that allows DoT to retrieve information from the source system on a regular basis.

When configuring a new connector, users can include an API token in the connector settings. This token authenticates the requests that DoT makes to the source system.
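To make the role of the optional connector token concrete, the sketch below models a connector's settings and derives the request headers from them. The `ConnectorConfig` class, its field names, and the Bearer scheme are all assumptions for illustration; the actual authentication scheme depends on the source system's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ConnectorConfig:
    """Hypothetical connector settings; field names are illustrative only."""
    source_url: str
    api_token: Optional[str] = None  # the token is optional in the connector settings


def auth_headers(config: ConnectorConfig) -> dict:
    # Assumed Bearer scheme; the real scheme depends on the source system's API.
    if config.api_token:
        return {"Authorization": f"Bearer {config.api_token}"}
    return {}
```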

To ensure security, these connector tokens are stored as part of the tenant configuration in DynamoDB. As outlined in this document, they are encrypted both at rest and in transit.

Data Classification

The following table lists the information captured in DoT and its classification.

| Type of Information | Classification |
|---|---|
| Project Information | Public |
| Technology | |
| Client name | |
| Client project names | |
| Software Delivery | Restricted |
| Epics | |
| Stories | |
| Test Cases | |
| Test steps | |
| Test data (No production data) | |
| Results of executions | Restricted |
| Results | |
| Environment | |
| Defects | |
| User Information Stored | Restricted |
| Names | |
| Work email addresses | |
| App Store Information: Public information | Public |
| Ratings | |
| Comments | |

Data Segregation

In DoT, data is segregated by tenant during the processes of data ingestion, transformation, and aggregation. This segregation ensures that each tenant’s data remains separate and distinct from others.

Additionally, documents stored in both S3 and OpenSearch are grouped by tenant, which further restricts the queries that can be run against them. This segregation ensures that tenant users can access and interact only with their own tenant’s information, maintaining confidentiality and preventing unauthorized access.
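One common way to group stored documents by tenant is to prefix every S3 object key with the tenant ID and to attach a mandatory tenant filter to every OpenSearch query. The sketch below shows that pattern under stated assumptions: the `tenants/<id>/` key layout and the `tenant_id` field name are hypothetical, and DoT's actual layout may differ.

```python
def s3_key(tenant_id: str, document_name: str) -> str:
    """Prefix every object key with the tenant ID (assumed key layout)."""
    if not tenant_id or "/" in tenant_id:
        raise ValueError("invalid tenant id")
    return f"tenants/{tenant_id}/{document_name}"


def tenant_scoped_query(tenant_id: str, user_query: dict) -> dict:
    """Wrap a user query in a bool query with a mandatory tenant filter."""
    return {
        "query": {
            "bool": {
                "must": [user_query],
                # The filter clause restricts results to one tenant's documents.
                "filter": [{"term": {"tenant_id": tenant_id}}],
            }
        }
    }
```

Because the filter is added on the server side rather than by the caller, a user-supplied query cannot widen its own scope beyond the tenant it was issued for.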

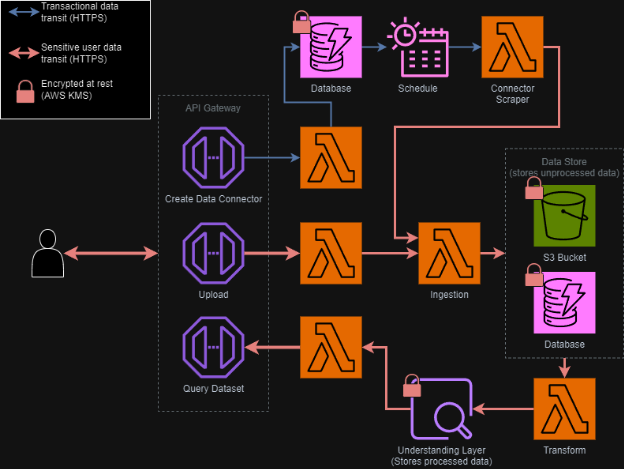

Security Flow Diagram

The diagram illustrates how data flows through the DoT system across the front end, back end, and APIs. It shows each stage of the flow (ingest, store, transform, query) and where the data is stored at each stage.

DynamoDB, S3, and OpenSearch store user data encrypted with default KMS service keys.

API users must use HTTPS. All AWS API calls that transfer data also use HTTPS and are additionally signed with AWS Signature V4 to prevent tampering.

The red line is user-uploaded data (e.g. Jira/JUnit). The blue line represents DoT transactional data (connector definitions).
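For readers unfamiliar with AWS Signature V4, the sketch below shows the key-derivation chain that makes tampering detectable: a signing key is derived from the secret key, date, region, and service, and any change to the signed string yields a different signature. AWS SDKs perform this automatically; the code is only an illustration, and any credentials passed to it here would be placeholders.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, message: str) -> bytes:
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def sigv4_signing_key(secret_key: str, date_stamp: str, region: str, service: str) -> bytes:
    """Derive the SigV4 signing key; date_stamp is in YYYYMMDD form."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign(signing_key: bytes, string_to_sign: str) -> str:
    """Return the hex-encoded signature for a string-to-sign."""
    return hmac.new(signing_key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
```

The full protocol also defines how the canonical request and string-to-sign are built; those steps are omitted here.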

Security Measures

Security measures for DoT:

- Regular security reviews and penetration tests.
- Encryption at rest for all storage resources.
- Obfuscated organization names: customers are referred to by a unique ID.
- Automated security analysis for backend and frontend software dependencies.
- Secure auth tokens with scoped permissions, particularly for automated data uploads.
- AWS developer access is restricted by SSO with MFA and uses auditable access roles.
- Production resources are in a separate, isolated AWS account. Developers cannot access production resources by default.
- GitHub developer access is restricted by SSO with MFA.
- Static code security analysis is performed on all changes to the backend data platform.
- All builds are reproducible via an infrastructure-as-code approach, and a daily process checks for configuration drift to ensure security configurations stay consistent.
- The Aurora Postgres instance is located in a private subnet of the DoT VPC and cannot be accessed publicly. The instance is password-protected, and access is permitted only via AWS Systems Manager, so AWS access policies are required to reach the database instance.
- A managed WAF is in place to prevent DDoS and other attacks.
- The AWS DoT tenant is completely separated from other Planit information servers, with separate accounts created for the DoT development team.
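The daily configuration-drift check listed above can be pictured as a comparison between the settings declared in infrastructure-as-code and the settings actually deployed. The following is a minimal sketch of that idea, not DoT's actual process; the `find_drift` function and the setting names in the example are hypothetical.

```python
def find_drift(expected: dict, actual: dict) -> dict:
    """Return {setting: (expected_value, actual_value)} for every mismatch.

    A setting missing on one side is reported with None for that side.
    """
    drift = {}
    for key in sorted(expected.keys() | actual.keys()):
        if expected.get(key) != actual.get(key):
            drift[key] = (expected.get(key), actual.get(key))
    return drift


declared = {"encryption_at_rest": True, "public_access": False}
deployed = {"encryption_at_rest": True, "public_access": True}
print(find_drift(declared, deployed))
```

An empty result means the deployed configuration matches what was declared; any entry identifies a setting to investigate.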